If you’re creating AI-generated images for your marketing or creative assets (or just for fun), you’ve likely come across both Midjourney and DALL-E 3. But which one is better for creators to use, and which tool’s images appeal more to audiences?

In this article, we’ll dive into the pros and cons of Midjourney and DALL-E, test their capabilities, ask an audience which images they prefer, and give our overall recommendation on which tool is better.

First, a quick overview of how AI image generation works.

How AI image generation works

At a basic level, AI image tools rely on machine learning: software that uses mathematical formulas and algorithms to “learn” and extract patterns from the data you feed it. For example, if you show a computer a bunch of images of a car and put the word “car” next to them, the computer will learn to associate the images with the text.
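To make the association idea concrete, here is a minimal sketch of a nearest-centroid classifier. It is only an illustration: the two-number feature vectors are made up by hand, whereas real systems learn far richer features directly from pixels, and neither DALL-E 3 nor Midjourney is claimed to work this way.

```python
from math import dist

# Hypothetical, hand-made feature vectors standing in for images.
# Real systems learn features from pixels; these numbers are invented.
labeled_examples = {
    "car": [(0.9, 0.1), (0.8, 0.2), (1.0, 0.0)],
    "tree": [(0.1, 0.9), (0.2, 0.8), (0.0, 1.0)],
}

def centroid(points):
    """Average the feature vectors seen for one label."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))

# "Training": remember one average vector per word.
centroids = {label: centroid(points) for label, points in labeled_examples.items()}

def predict(features):
    """Return the label whose average vector is closest to the input."""
    return min(centroids, key=lambda label: dist(features, centroids[label]))

print(predict((0.85, 0.15)))  # a new "image" that resembles the car examples
```

The more labeled examples the program sees, the better its averages describe each word, which is the "learning" part in miniature.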

AI image generation is an evolution of the association example we mentioned above. Once a computer can recognize that the word “car” belongs with the image of a car, it can also recognize the opposite: that the image of a car belongs with the word. Add in some natural language processing capabilities to the code, and you can write “show me a car” – and it will.

DALL-E 3 vs Midjourney

DALL-E 3 is built by OpenAI and integrated with ChatGPT, OpenAI’s conversational AI tool, which can expand a short request into a more detailed image prompt. Its strength is in interpreting text prompts accurately and naturally.

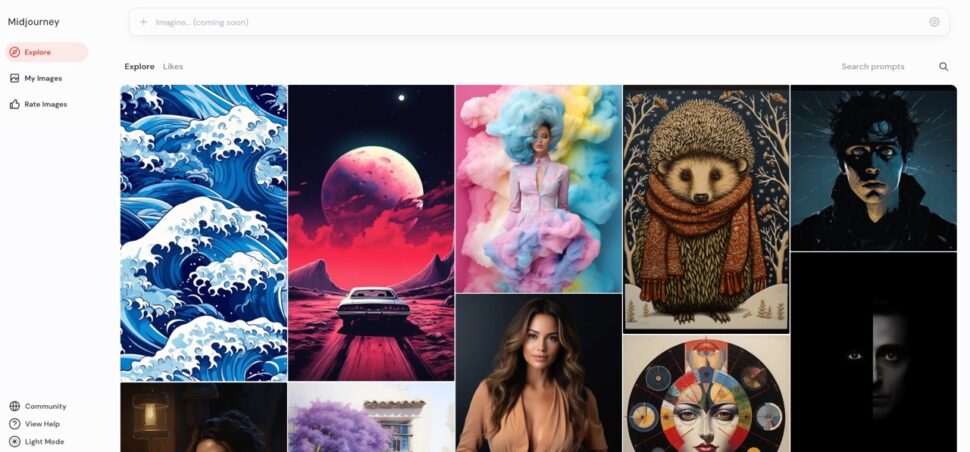

Midjourney is one of the original and most powerful artificial intelligence (AI) image generation tools available. While it’s also a text-to-image tool, Midjourney has been trained on millions upon millions of artworks from a wide variety of genres. Its specialty is creating rich, vivid, high-quality images.

In a previous blog, we compared DALL-E 3 to Stable Diffusion, another AI image generator, and DALL-E 3 came out on top. Now, we’re putting it up against Midjourney.

How DALL-E 3 and Midjourney use AI to train

Both DALL-E 3 and Midjourney use a method of AI image generation training called diffusion. In diffusion, AI is trained not only with image-word associations, but also by having the AI break down the images it’s being trained on until there is nothing but random noise.

Imagine blurring an image to the point that it’s basically just random static on the page. That’s what AI image generators do to millions or even billions of images during the training process. By watching each image dissolve into noise one small step at a time, the machine learning tool learns how to run the process in reverse and turn noise back into an image.
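The noising half of that process can be sketched in a few lines. This is a toy version under stated assumptions: it uses the linear noise schedule and Gaussian mixing formula common in published diffusion models, a four-number list stands in for an image, and the function names are ours. Neither OpenAI nor Midjourney has confirmed that their tools use these exact settings.

```python
import math
import random

def noise_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Linear per-step noise amounts (an assumed, commonly used schedule)."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]

def signal_fraction(betas, t):
    """Cumulative product of (1 - beta) up to step t: how much of the original survives."""
    alpha_bar = 1.0
    for beta in betas[: t + 1]:
        alpha_bar *= 1.0 - beta
    return alpha_bar

def add_noise(pixels, betas, t, rng):
    """Jump straight to step t by mixing the image with Gaussian noise."""
    alpha_bar = signal_fraction(betas, t)
    return [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
        for x in pixels
    ]

betas = noise_schedule(steps=1000)
image = [0.0, 0.5, 1.0, 0.5]  # a tiny stand-in "image"
rng = random.Random(0)

for t in (0, 499, 999):
    print(t, round(signal_fraction(betas, t), 4), add_noise(image, betas, t, rng))
```

At the first step nearly all of the original image survives, and by the last step almost none does. Training teaches a model to estimate the noise added at each step so that it can be removed again.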

Diffusion in process

When you give the same program the same prompt but a slightly different set of starting “noise,” it will create a slightly different image. These steps, done over and over with different prompts and different starting inputs, create the many different variations of AI-generated images that are possible.
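As a rough illustration of why starting noise matters, the sketch below draws a tiny noise sample from a seeded random number generator. The `starting_noise` helper is hypothetical and only stands in for the much larger noise canvas a real generator begins with.

```python
import random

def starting_noise(seed, size=4):
    """Draw a small Gaussian noise sample; the seed fully determines the result."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

same_a = starting_noise(seed=1)
same_b = starting_noise(seed=1)
different = starting_noise(seed=2)

print(same_a == same_b)     # same seed, same starting point
print(same_a == different)  # new seed, new starting point
```

Because the denoising steps begin from that sample, a new seed sends the same prompt down a different path and produces a different image.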

Diffusion complete

Let’s dive into the pros and cons of each tool.

Pros of DALL-E 3

Just like OpenAI’s more famous ChatGPT product, DALL-E 3 understands natural patterns of speech. It excels at faithfully producing the desired content from broad, vague, or short text prompts. DALL-E 3 is also extremely fast at producing images, often three to four times faster than other tools under the same conditions.

Other pros of DALL-E 3 include:

Ease of use

Users who are new to AI tools will find DALL-E 3 easy to use right off the bat – just type in your prompt, hit generate, and get a handful of images almost immediately.

You can also upload your own images and make changes using inpainting or outpainting tools. Inpainting lets you change sections of your existing image using prompts, while outpainting lets you expand the canvas and add more to it with prompts.

Availability

Users can access DALL-E 3 through the OpenAI suite, via ChatGPT, or directly from DALL-E 3’s own website. Meanwhile, Midjourney users can only access the tool through Discord by typing the relevant text command, which is much less straightforward.

Per-image pricing

DALL-E’s pricing is straightforward: $15 buys 115 credits, which you can use to enter 115 image prompts. Each prompt returns four pictures as a result, so each image you generate costs about 3 cents. This pricing structure makes it easy for users to plan and budget.
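The arithmetic behind those figures is easy to check and reuse for budgeting. The sketch below uses only the numbers quoted above; the `prompts_for_budget` helper is hypothetical and assumes credits are sold only in whole $15 packs.

```python
CREDIT_PACK_PRICE_USD = 15.00
CREDITS_PER_PACK = 115      # one credit = one prompt
IMAGES_PER_PROMPT = 4

cost_per_prompt = CREDIT_PACK_PRICE_USD / CREDITS_PER_PACK
cost_per_image = cost_per_prompt / IMAGES_PER_PROMPT

def prompts_for_budget(budget_usd):
    """How many prompts a budget covers, assuming only whole packs can be bought."""
    packs = int(budget_usd // CREDIT_PACK_PRICE_USD)
    return packs * CREDITS_PER_PACK

print(f"per prompt: ${cost_per_prompt:.3f}")
print(f"per image:  ${cost_per_image:.3f}")
print(prompts_for_budget(50))
```

Each prompt works out to roughly 13 cents, which is where the figure of about 3 cents per image comes from.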

Image ownership

OpenAI states on its website: “You own the images you create with DALL·E, including the right to reprint, sell, and merchandise – regardless of whether an image was generated through a free or paid credit.” The actual legal rights to and use of AI-generated images go beyond simple ownership (see our caveat about this at the end of this blog), but it’s a positive that DALL-E 3 releases images created with the tool to the user.

Meanwhile, free Midjourney users are “licensed” the images they create and can use them for personal and non-profit activities, but not for commercial ones. They’ll need to upgrade to a paid plan to own and use the images commercially. (You can view Midjourney’s full terms here.)

Pros of Midjourney

Midjourney creates stunning images and almost photo-real art thanks to its history of training with real images as well as art in a multitude of styles. Midjourney was at one stage rumored to be based on the Stable Diffusion algorithm, but that has since been debunked. The rumor arose because the style choices available in the two tools are so similar.

Midjourney users appreciate the detail-rich and beautifully constructed art it generates.

Other pros of Midjourney include:

Image manipulation

Once you get into Midjourney through Discord, you have a decent amount of control over the artwork you generate. You can play around with filters and effects to tweak the generated images to your wants and needs.

Subscription-based pricing

Midjourney’s pricing plans are divided into four tiers: Basic, Standard, Pro, and Mega. You can subscribe to them monthly or annually, and all but the Basic plan offer unlimited time on the servers. The higher tiers offer faster processing on a high-speed server. This subscription pricing makes it easier for users to set it and forget it – and you don’t need to top up credits to complete projects, like you do on DALL-E 3.

Field testing images created by Midjourney vs DALL-E 3

Now, it’s time to put these generative AI tools to the test against real people. We used PickFu’s polling platform to ask an audience for feedback on images generated by DALL-E 3 and Midjourney, and evaluated the tools based on:

- Photo-realistic imagery

- Text prompt recognition and understanding

- Image quality

- Image appeal (did the audience like it?)

Here’s how the tests went.

Test 1: Utah sunset

The first test used the AI prompt: “A realistic landscape of the Utah desert at sunset in fall.” In our previous comparison between DALL-E 3 and Stable Diffusion, respondents preferred the DALL-E-generated image by a large margin, citing its realism and richness.

In another comparison, Midjourney vs. Stable Diffusion, Midjourney also performed fantastically.

It’s a credit to Midjourney that it dominated the test for the same reasons, taking the richness and realism of the AI-generated photo to a new level.

Audience responses to Midjourney’s image included:

- “This one looks like an actual photograph and is much more realistic version!”

- “This feels more accurate to Utah and captures more of the sky and ground.”

- “The scenery is more realistic, fitting, and visually detailed.”

Here’s how PickFu’s AI natural language processor summed up the responses:

“Respondents consistently mentioned their preference for images that looked more like photographs rather than computer-generated artwork. They also emphasized the importance of accurately depicting Utah’s terrain features such as canyons and rock formations in order to reflect an authentic desert landscape. Lastly, respondents considered it crucial for the images to capture the colors and atmosphere associated with fall through elements like foliage coloration and sunset lighting.”

While both DALL-E 3 and Midjourney successfully interpreted the text prompt and produced vivid images, Midjourney excelled in photo-realism and in generating an image our panel found beautiful and engaging.

Test 2: motorcycle race

This test prompted both AI art programs to create an image of “a motorcycle race with three different color motorcycles.” In our previous test against Stable Diffusion, DALL-E 3 was the winner with the audience, and it won again here.

A panel of 100 people (selected from PickFu’s panel of 15 million globally) returned the result within a few hours and gave the victory to DALL-E 3, 70 votes to 30. We might have seen a different result had we selected special interest groups to weigh in, perhaps by only asking motorcyclists or people with an interest in motorcycle racing to answer the poll. Still, the quick survey of a broad audience gave us an immediate and clear winner.
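For readers who want to gauge how decisive a 70-to-30 split is, here is a minimal sketch of an exact two-sided binomial test. This check is our addition, not part of the poll report: it assumes each of the 100 respondents voted independently and asks how often a split at least this lopsided would appear if the panel had no real preference.

```python
from math import comb

def vote_share(votes_for, total):
    """Fraction of the panel that picked one option."""
    return votes_for / total

def two_sided_binomial_p(successes, trials, p=0.5):
    """Exact test: total probability of outcomes no more likely than the observed one."""
    pmf = [comb(trials, k) * p**k * (1 - p) ** (trials - k) for k in range(trials + 1)]
    observed = pmf[successes]
    # Small tolerance so the mirror-image outcome is counted despite float rounding.
    return sum(prob for prob in pmf if prob <= observed * (1 + 1e-9))

share = vote_share(70, 100)
p_value = two_sided_binomial_p(70, 100)

print(f"DALL-E 3 share: {share:.0%}")
print(f"p-value under an even split: {p_value:.2e}")
```

A very small p-value suggests the gap is unlikely to be chance within this panel. It says nothing about whether a different audience, such as motorcycle racing fans, would vote the same way.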

Respondents found the DALL-E 3 image more realistic than the artistic, almost impressionist interpretation from Midjourney. This is a good example of how prompt engineering can affect the outcome. Without the word “realistic” in the prompt, Midjourney opted for a more artful rendition of the scene.

The panelists’ feedback included:

- “I like the bikes leaning into a turn. It feels like more of an action shot and draws more of an emotional response from me.”

- “This one looks more like racing, especially with the tilt on the bikes. It looks much more realistic.”

- “The motorcycles are more distinctly different colors and it feels like they’re in a competitive race.”

While DALL-E 3’s image looked more realistic, some fans of Option B (Midjourney’s image) pointed out that the more abstract style made it feel like an actual painting: “The watercolor painting looking one is really stylish and is a much more attractive than the other one that looks a little computer generated.”

Field test results

Our field test resulted in a tie, but it did highlight core strengths for each AI image tool.

DALL-E 3 is powerful when it comes to its ability to understand and interpret prompts, thanks to its advanced natural-language programming. Meanwhile, Midjourney is excellent at creating vivid images that look as though they could have been created by a human, not just a computer.

Which AI text to image generator should you use?

Both of these AI models let users create images in a variety of art styles. Midjourney images tend to look more like organic, human-created works than those of DALL-E 3, but DALL-E 3 is easier to use, especially for beginners. It’s also better at understanding and interpreting your prompts, and it can still come up with very realistic-looking images.

If you want realistic, vibrant AI art, Midjourney is probably the best image generator for you.

However, if you need a tool that is easy to use and consistently interprets textual descriptions well, then DALL-E 3 is a very strong option.

The ethics of AI

While both of these image generation models give creators the rights to use the images they build, the real legal status of AI-generated images in corporate use cases isn’t yet established. The U.S. Copyright Office, for example, has said that works generated entirely by AI without human authorship cannot be copyrighted, which is a problem if you use these tools to build your new logo.

DALL-E 3 also takes steps to protect the likeness of celebrities, politicians, and other famous people by blocking prompts that ask for images of them. It also prevents users from creating explicit, violent, and hateful imagery with its tool.

Midjourney requires AI artists to agree to its terms of use, which forbid violent, hateful, and explicit imagery. Users can be kicked off the platform for misusing the tool.

Is it ethical to ask an image generator to “create a picture of a soup can in the style of Andy Warhol”? Many artists claim that it isn’t and that it infringes on their creative intellectual property. Both tools allow artists to opt out of having their work used for training, but most of these and other AI generators have already been “trained” on art from millions of artists, famous and not famous alike.

Using AI image generators undoubtedly removes work from graphic designers and artists. Meanwhile, proponents of the software say this democratizes art and makes it more accessible to more people. The ethics of AI as it replaces human labor is a long and difficult conversation that corporations, societies, and legislators will be engaged in for a long time to come.

Add a human touch to your AI-generated images

AI-generated images can be a great way to help your team spark ideas, streamline asset creation, get feedback on creative direction, and create genuinely unique images for your marketing and products. But it’s essential to use these tools with care and be mindful of how you use your images.

Don’t neglect the importance of adding a one-of-a-kind human touch to AI-generated assets. Asking real people what they think about your images (using a consumer insights tool like PickFu) not only helps ensure that your audience will like your images, but also provides you with specific feedback that you can use to enhance and improve them.

You can sign up for PickFu to run polls and ask for feedback on your AI-generated images.