Artificial intelligence (AI) is becoming more powerful and more integrated into our lives by the day. Modern AI can do everything from monitoring your bank account and making sure your transactions are secure to writing an essay for you.

AI image generation allows people to generate their own artwork and images, often using little more than a prompt and a button click. Say you want a picture of a bunch of cats playing darts. You can type that into an AI image generator and voilà, you have it!

DALL-E 3 and Stable Diffusion are two popular AI image generators. In this article, we’ll cover the pros and cons of each one and share our opinion on which is better overall. First, let’s quickly cover exactly how AI works.

What is artificial intelligence?

Most modern AI is built on machine learning, a branch of AI in which computers learn from data. Basically, computers are fed information, and algorithms process that information so they can learn and extract patterns. In a simplified example, you can show a computer a ton of photos of an orange with the text “orange” next to each one and then a ton of photos of a banana with “banana” next to each one. Eventually, the computer program is able to “recognize” oranges and bananas.
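To make the orange-and-banana idea concrete, here is a toy sketch in Python. It assumes each photo has already been boiled down to two made-up numbers (a hue and an "elongation" score), and it uses a very simple nearest-average method. Real image recognition learns from raw pixels with neural networks, so treat this as an illustration of "learning from labeled examples" and nothing more. All names and values here are invented for the example.

```python
from statistics import mean

# Hypothetical training data: each "photo" is reduced to two invented
# features, (hue, elongation), paired with the label shown next to it.
TRAINING = [
    ((0.08, 1.0), "orange"),
    ((0.09, 1.1), "orange"),
    ((0.07, 0.9), "orange"),
    ((0.15, 3.0), "banana"),
    ((0.16, 3.4), "banana"),
    ((0.14, 2.8), "banana"),
]


def train(examples):
    """Average the features seen for each label (one centroid per label)."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(axis) for axis in zip(*rows))
        for label, rows in by_label.items()
    }


def classify(centroids, features):
    """Return the label whose centroid is closest to the new example."""
    def distance(label):
        return sum((a - b) ** 2 for a, b in zip(features, centroids[label]))
    return min(centroids, key=distance)


centroids = train(TRAINING)
print(classify(centroids, (0.08, 1.05)))  # a round, orange-hued fruit
print(classify(centroids, (0.15, 3.1)))   # a long, yellow-hued fruit
```

The program never gets a rule like "bananas are long." It only sees labeled examples and works out the pattern from them, which is the core idea behind the much larger models discussed in this article.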

How does AI image generation work?

Image generation is an evolution of the example above. Once a computer can recognize that the word “orange” belongs to the image of an orange, it can also recognize that the image of an orange belongs next to the word. Add in some natural language processing capabilities to the code and you can write “show me an orange” and it will produce an image to match.

DALL-E 3 vs Stable Diffusion

DALL-E 3 and Stable Diffusion are two of the more prevalent AI art generators. You might also have heard of Midjourney (which we’ve written two comparisons on, Midjourney vs. DALL-E 3 and Midjourney vs. Stable Diffusion), but in this piece we’ll focus on the two most user-friendly and commonly used AI models.

OpenAI, the makers of ChatGPT, also created DALL-E 3, which follows on from DALL-E and DALL-E 2 as the third generation of the company’s trained image generator.

Stable Diffusion comes at the task from a slightly different angle. It’s actually a combination of multiple open-source algorithms and models, and you can use it in the DreamStudio app from Stability AI or download it yourself and train your own diffusion model.

How DALL-E 3 and Stable Diffusion use AI to train

Both of these use a method of AI image generation called diffusion. In diffusion, AI is trained not only with image-word associations, but also by having the tool break down the images it’s being trained on until they’re nothing but random noise.

Imagine blurring an image to the point it’s just a random bit of static on the page. That’s what AI image generators do to millions (or even billions) of images during the training process.

A trainer might show the Mona Lisa, and add keywords like “da Vinci,” “portrait,” “Louvre,” and “Renaissance.” Then the program will blur that image into nothing. When the process is reversed, the image is restored, and the computer learns the steps to build an image that meets the keywords “da Vinci” and “Renaissance.”

If you give the same program a slightly different set of blurry background “noise”, but the same prompts, it will create a slightly different image. These steps done over and over with different prompts and different starting inputs create the many different variations you can see.
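Here is a minimal Python sketch of the "turn an image into static" half of that process. It assumes a tiny made-up image of eight brightness values and a fixed noise amount per step (the names `BETA` and `STEPS` and their values are illustrative, not taken from either tool). It only shows the noising direction. The reverse direction, where the model learns to rebuild an image, needs a trained neural network and is not shown.

```python
import math
import random

BETA = 0.05   # assumed amount of noise mixed in at each step
STEPS = 200   # assumed number of noising steps


def add_noise(pixels, beta, rng):
    """One forward step: shrink the signal slightly and mix in Gaussian noise."""
    keep = math.sqrt(1.0 - beta)
    mix = math.sqrt(beta)
    return [keep * p + mix * rng.gauss(0.0, 1.0) for p in pixels]


def diffuse(pixels, steps, beta, seed):
    """Apply the forward step repeatedly and return the final noisy image."""
    rng = random.Random(seed)
    for _ in range(steps):
        pixels = add_noise(pixels, beta, rng)
    return pixels


# A hypothetical 8-pixel "image": a simple brightness ramp (values invented).
image = [-1.0, -0.7, -0.4, -0.1, 0.1, 0.4, 0.7, 1.0]

# Fraction of the original image that survives after all the steps.
signal_left = math.sqrt(1.0 - BETA) ** STEPS

noisy_a = diffuse(image, STEPS, BETA, seed=1)
noisy_b = diffuse(image, STEPS, BETA, seed=2)

print(f"original signal remaining: {signal_left:.4f}")
print("seed 1:", [round(p, 2) for p in noisy_a])
print("seed 2:", [round(p, 2) for p in noisy_b])
```

After enough steps almost none of the original image is left, and two different seeds give two different patches of static. That second point is why the same prompt can produce a different picture each time: generation starts from a different batch of noise.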

So which of these two systems is better?

Pros of DALL-E 3

DALL-E 3 seems trained to produce more abstract or painting-like images than other tools, and it excels at responding to less detailed or broader prompts – simple and short is better.

The OpenAI team focused on natural language processing, so prompt engineering is less of an issue. That means you can write a natural prompt and DALL-E 3 is highly likely to understand it.

DALL-E 3 is also extremely fast at producing images, often three to four times faster than Stable Diffusion under the same conditions.

Other pros of DALL-E 3 include:

Ease of use

Users new to AI tools or art tools in general will find DALL-E 3 the easiest to use right off the bat. Just type in your prompt and hit generate to get a handful of images straight away. You can upload your own images and make changes using inpainting or out-painting tools. Inpainting lets you change sections of your image with new prompts, while out-painting lets you expand the canvas and add more context with prompts.

In every step, all you do is enter a prompt and hit generate.

Simple pricing

DALL-E 3 has very simple pricing. $15 buys 115 credits, which you can use to enter 115 image prompts. Each prompt returns four pictures as a result, so it’s about 3 cents per image you generate. This simple formula makes costs easy to predict.
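If you want to check that math yourself, it takes only a few lines of Python. The numbers come straight from the pricing described above; the variable names are just for illustration.

```python
PACK_PRICE_USD = 15.00   # price of one credit pack
CREDITS_PER_PACK = 115   # one credit = one prompt
IMAGES_PER_PROMPT = 4    # each prompt returns four pictures

cost_per_prompt = PACK_PRICE_USD / CREDITS_PER_PACK
cost_per_image = cost_per_prompt / IMAGES_PER_PROMPT

print(f"per prompt: ${cost_per_prompt:.3f}")  # per prompt: $0.130
print(f"per image:  ${cost_per_image:.3f}")   # per image:  $0.033
```

Keep in mind that the per-image figure assumes you actually want all four pictures from each prompt. If you only keep one, the effective cost is the per-prompt figure.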

Image ownership

OpenAI says right on its page that “The images you create with DALL-E 3 are yours to use and you don’t need our permission to reprint, sell or merchandise them.” The actual legal rights and use of AI-generated images goes beyond simple ownership, but it’s a positive that DALL-E 3 releases images created on the tool to the user.

Pros of Stable Diffusion

Stable Diffusion seems to have been trained on more contemporary data sets. It’s also a little more free in its application, especially because you can use it in the potent DreamStudio program, or use it in other third-party apps based on its open source software. You can even download it to your own system and train your own AI generator sets using your own images, logos, or even your own face.

Other pros of Stable Diffusion include:

Versatility and power

Stable Diffusion offers a lot of freedom and access to multiple prompt tools right from the outset. In addition to the inpaint and outpaint tools, you can choose from a set of styles, and you can even select a negative prompt. That’s a prompt for things you don’t want to see in your picture.

Set your own rules

Using Stable Diffusion you can decide how many steps each iteration will take, how many images it will generate for you to select from, and even how much it will “listen” to your prompts.
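To picture what these controls look like together, here is a small Python sketch of a settings bundle. The class, its field names, and its default values are all hypothetical and are not the DreamStudio interface or any Stable Diffusion API. Many Stable Diffusion tools label the "how much it listens" control as a guidance or CFG scale.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical bundle of the controls described above (illustrative names)."""

    prompt: str
    negative_prompt: str = ""      # things you do not want in the picture
    steps: int = 30                # refinement steps per image (assumed default)
    image_count: int = 4           # images to choose from (assumed default)
    prompt_strength: float = 7.0   # how closely to follow the prompt (assumed default)

    def __post_init__(self):
        # Reject values that would make no sense for a generation request.
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if self.steps < 1:
            raise ValueError("steps must be at least 1")
        if self.image_count < 1:
            raise ValueError("image_count must be at least 1")
        if self.prompt_strength < 0:
            raise ValueError("prompt_strength must not be negative")


settings = GenerationSettings(
    prompt="a motorcycle race with three different color motorcycles",
    negative_prompt="pixel art, text, watermark",
    steps=50,
    image_count=2,
)
print(settings)
```

More steps and more images generally mean more processing per request, which is worth remembering when you get to pricing.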

Cheaper pricing

Stable Diffusion’s pricing is complicated. You pay for image generation with credits, but those credits vary based on how many steps you choose, how you prompt, the size of the image, and how many images you want to generate each time.

Using the base settings though, you can produce 1,000 images for around $10 worth of credits. You can also sign up for 25 free credits, enough for between 5 and 10 images. The low price and the free trial make Stable Diffusion the clear winner on value.
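A quick side-by-side in Python shows how big the gap is. This sketch uses only the figures quoted in this article ($15 for 115 four-image prompts on DALL-E 3, and roughly $10 per 1,000 images on Stable Diffusion at base settings). The helper function is our own, and the Stable Diffusion number will shift if you change steps, image size, or batch size.

```python
def cost_per_image(price_usd, images):
    """Return the average price of one image, in US dollars."""
    return price_usd / images


dalle3 = cost_per_image(15.00, 115 * 4)  # 115 prompts, four images each
stable = cost_per_image(10.00, 1000)     # base settings, approximate

print(f"DALL-E 3:         ${dalle3:.3f} per image")
print(f"Stable Diffusion: ${stable:.3f} per image")
print(f"DALL-E 3 costs about {dalle3 / stable:.1f}x as much per image")
```

On these numbers, Stable Diffusion comes out at roughly a third of the per-image price, before counting any extra attempts you might need to get the prompt right.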

Field testing images created by Stable Diffusion vs DALL-E 3

We used PickFu’s consumer insights platform to test a set of images produced by each text-to-image model among consumers. These two different image sets tested the models on:

- Photo-realistic imagery

- Text prompt recognition and understanding

- Image quality

We then polled panelists to get their response to the two images created by these generative AI programs.

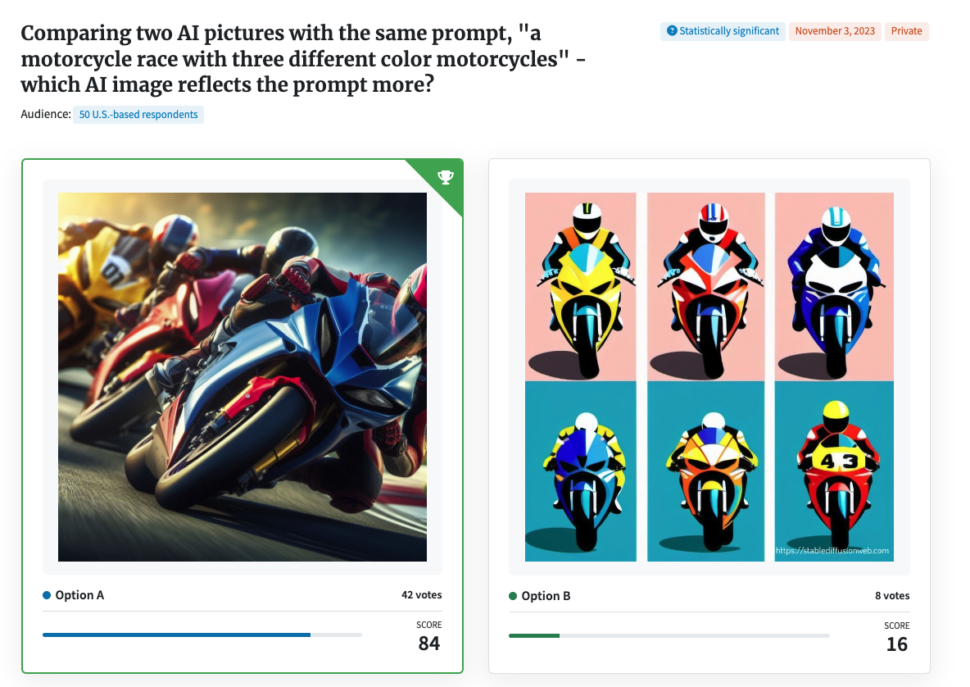

Motorcycle race comparison

First, we asked both Stable Diffusion and DALL-E 3 to generate new images using the text prompt “a motorcycle race with three different color motorcycles.” Then we asked the audience to select which they preferred.

This comparison shows the importance of “prompt building” for Stable Diffusion and highlights DALL-E 3’s ability to read and understand the prompt more naturally. This has a lot to do with OpenAI’s ChatGPT natural language technology, which underpins DALL-E 3.

A quick and easy survey of 100 members from the 15-million-strong panel of PickFu survey respondents showed a clear preference for the DALL-E 3 model. PickFu’s natural language AI tools processed the free-text responses from this survey and showed that respondents much preferred DALL-E 3’s more accurate interpretation of the prompt.

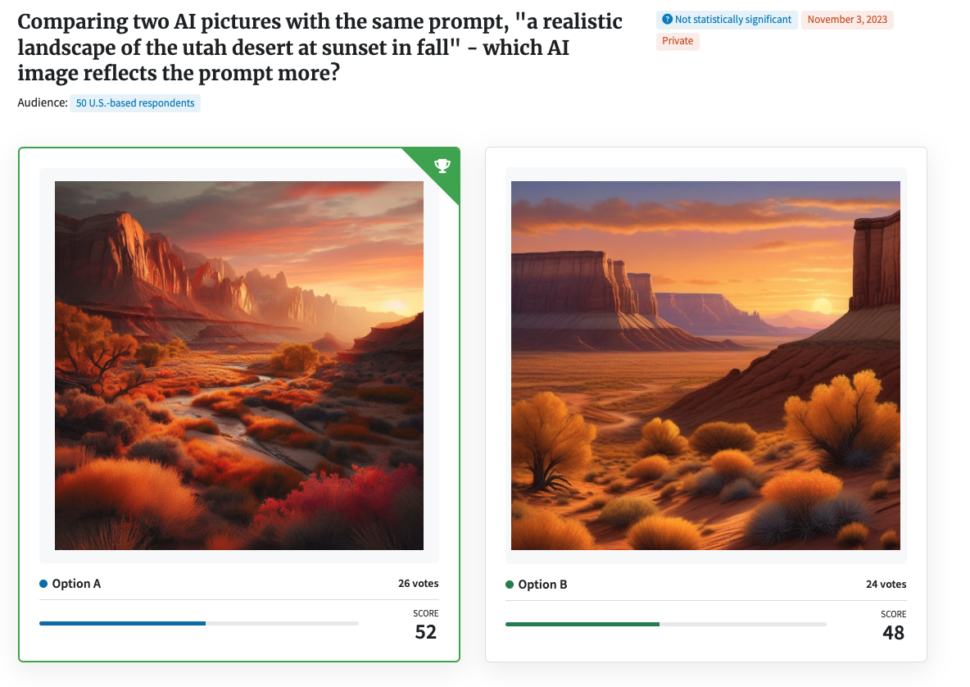

Landscape image comparison

The next prompt called for a “realistic landscape of the Utah desert at sunset in fall.”

Both tools understood this prompt well, but the respondents gave a slight edge to the image created by DALL-E 3. The PickFu AI summary of the poll responses explains why:

“In this survey comparing two AI images of a realistic landscape of the Utah desert at sunset in fall, Option A emerged as the winning option with 26 votes, while Option B received 24 votes. The characteristics that set Option A apart and contributed to its victory were its more realistic vegetation, varied and artfully blurred cliffs, and the use of oranges and reds to emphasize the seasonal aspect of fall. Respondents noted that Option B had unrealistic vegetation, identical cliffs, and a cartoonish feel due to exaggerated colors. Overall, respondents perceived Option A as more detailed, bold, inviting, varied in terms of landscape elements such as vegetation and cliffs.

The top three topics that emerged across all options were realism/authenticity (mentioned by both supporters and detractors), representation of Utah’s landscape features (such as mountains/cliffs), and adherence to the prompt’s specific requirements (fall colors). Respondents consistently commented on their perception of realism in terms of vegetation accuracy or believability. Additionally, they compared both options against their personal experiences or knowledge about Utah’s landscapes. Finally, many respondents considered how well each option aligned with the given prompt by evaluating factors like color choices associated with fall.”

Real people preferred DALL-E 3

DALL-E 3 won both of our field tests with a panel of real consumers. In both scenarios, it produced more realistic images and showed a better understanding of the prompt.

From a creator perspective, while Stable Diffusion’s DreamStudio program makes it possible for would-be AI artists to create high-quality images with editing and tweaking tools, DALL-E 3 is better for beginners. In the landscape test, it produced detailed, high-resolution imagery that looked and felt like a true Utah sunset.

In the motorcycle test, Stable Diffusion went for a pixel-art style panel representation of multiple colored motorcycles instead of the active race scene called for in the prompt. This could be changed with a bit of prompt engineering and time, but that costs money. DALL-E 3 produced the goods on the first go, so it gets the win here for ease of use and results.

Even better, the audience agreed that the DALL-E 3 images reflected the prompts more accurately and produced better-looking images overall. If you’re sharing your AI-generated images with a wider audience, then what real people think about them obviously matters. From our field test, DALL-E 3 wins across the board.

The ethics of AI

While both of these image generation models give the user the final rights over the images built, the real legal status of these images in corporate use cases isn’t yet established. Some jurisdictions have ruled that AI-generated art cannot be copyrighted, for example, which is a problem if you use these tools to build your new logo.

DALL-E 3 also takes steps to protect the likeness of celebrities, politicians, and other famous people by preventing you from creating images with them as a prompt. It also prevents users from creating explicit, violent, and hateful imagery with its tool.

Is it ethical to ask an image generator to “create a picture of a soup can in the style of Andy Warhol”? Many artists claim that it isn’t and that it infringes on their creative intellectual property. Both tools allow artists to opt out of having their work used for training, but most of these and other AI generators have already been “trained” on art from millions of artists, famous and not famous alike.

Using AI image generators undoubtedly removes work from graphic designers and artists. Meanwhile, proponents of the software say this democratizes art and makes it more accessible to more people. The ethics of AI as it replaces human labor is a long and difficult conversation that corporations, societies, and legislators will be engaged in for a long time to come.

Add a human touch to your AI-generated images

AI-generated images can be a great way to help your team spark ideas, streamline asset creation, get feedback on creative direction, and create genuinely unique images for your marketing and products. But it’s essential to use these tools with care and be mindful of how you use your images.

Don’t neglect the importance of adding a one-of-a-kind human touch to AI-generated assets. Asking real people what they think about your images (using a consumer insights tool like PickFu) not only ensures that your audience will like your images, but also provides you with specific feedback that you can use to enhance and improve them. You can sign up for PickFu to run polls and ask for feedback on your AI-generated images.